The Connectionist Approach

Oscillations is a cycle of three pieces, including narrated and sung voice, piano, toy piano, electronics, and visuals. Drawing inspiration from the song cycle Winterreise, written by W. Müller and set to music by F. Schubert, Oscillations reimagines selected songs from the cycle, exploring their emotional landscapes through a personal lens and compositional prism. On one hand, the musical and literary content of the original cycle serves as a prompt for artificial resynthesis using AI techniques. On the other hand, personal evocations are reimagined as a new narrative for the poetry, a process I view in analogy to prompting, but using the human mind as a latent space. The work highlights questions of distinctiveness and similarity between human and AI creativity, focusing particularly on the intuitive and exploratory aspects of the creative process.

In this project, I collaborated with the Bergen-based artist Andrea Urstad. She was in charge of the visual narratives, which took the form of video and projections. More about Andrea can be found at https://www.andreatoft.com/

Chapter Overview

- Connectionism
  - Artificial Neural Networks
  - Intuitive Computers / Rational Composers
- Oscillations
  - Schubert-Müller’s Winterreise
  - Ekphrasis and Misreading
  - Creative Process
  - (Ongoing) Reflections

Connectionism

“Events that co-occur in space or time become connected in the mind. Events that share meaning or physical similarity become associated in the mind. Activation of one unit activates others to which it is linked, the degree of activation depending on the strength of association”.

In the quote above, Peter Gärdenfors effectively captures the essence of connectionism, highlighting the idea of associations within the mind between co-occurring events. Rooted in the longstanding philosophical theory of associationism, * In philosophy, associationism explains mental processes as the result of associations between ideas. Emerging from Aristotle’s early ideas on principles of contiguity, similarity, and contrast as mechanisms of memory and learning, it gained prominence in modern philosophy during the 17th and 18th centuries with British empiricist philosophers, notably John Locke (see John Locke, An essay concerning human understanding (1948)). Critics of this theory argue that it is overly reductive, reducing thought to mechanical links between ideas while neglecting higher-order processes like reasoning and problem-solving. Noam Chomsky, for example, has highlighted its failure to consider innate cognitive structures that guide perception and language acquisition (see Noam Chomsky, Syntactic structures (Mouton de Gruyter, 2002)). Additionally, Vygotsky and others challenge the universality of associationist principles, highlighting the role of social interaction and context in learning (see Lev S Vygotsky, Mind in society: The development of higher psychological processes, vol. 86 (Harvard university press, 1978)). These limitations have spurred the development of more nuanced theories in cognitive science, such as connectionism, which addresses the gaps left by traditional associationist views. connectionism links learning to thought based on the principles of an organism’s causal history. In essence, it posits that thoughts become linked through an organism’s past experiences, which then serve as the primary shaper of its cognitive architecture. Connectionism builds on this foundation and spans fields such as AI, cognitive science, and neuroscience. 
Theorists who have investigated connectionist systems as models of human thought include James L. McClelland, David E. Rumelhart, Stephen Grossberg, * Neural Networks and Natural Intelligence, ed. Stephen Grossberg (The MIT Press, 1988). https://doi.org/10.7551/mitpress/4934.001.0001. and Geoffrey Hinton. * Geoffrey Hinton is one of the pioneering figures in neural networks and deep learning. His contributions have been instrumental in advancing the understanding of how connectionist models can replicate aspects of human cognition. In 2023, Hinton resigned from Google, expressing concerns about the potential dangers posed by new deep learning technologies and the lack of adequate control and regulation over their development and deployment. In 2024, Hinton was awarded the Nobel Prize in Physics.

Connectionism aims to represent and model the human mind as emerging from networks of single interconnected units. * Christoph Lischka and Andrea Sick, Machines as agency: artistic perspectives, Schriftenreihe ... der Hochschule für Künste Bremen; 4; 04, (Bielefeld, Piscataway, NJ: Transcript; Distributed in North America by Transaction Publishers, 2007). In order to explain cognition using connectionist models, information is represented as distributed patterns of activity over these interconnected networks of processing units, and the process of learning occurs through modifications of the strength of connections between these units. * John N Williams, "Associationism and connectionism," in Encyclopaedia of Language and Linguistics: Second Edition, ed. K.E. Brown (Oxford: Elsevier, 2005). Several different kinds of connectionist models found in the literature can be classified based on their architecture –inner structure– or modes of learning. For instance, in some models, the units of a network may represent neurons, with connections representing synapses. In other models, each unit might represent a word, with connections indicating semantic similarity. * Tomas Mikolov, "Efficient estimation of word representations in vector space," arXiv preprint arXiv:1301.3781 (2013). The structure of the connections and units varies from one model to another. However, the most common model in connectionism is the neural network. Connectionism uses these networks to represent knowledge and concepts as a distributed representation across connections.
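The two ideas above, that co-occurring events become linked and that activation spreads according to the strength of those links, can be illustrated with a minimal sketch. It is not taken from any of the cited models: the event names, the learning rate, and the functions `hebbian_train` and `spread` are all assumptions made purely for illustration.

```python
from itertools import combinations

def hebbian_train(episodes, rate=0.1):
    """Strengthen the link between every pair of units that co-occur in an episode."""
    weights = {}
    for episode in episodes:
        for a, b in combinations(sorted(set(episode)), 2):
            weights[(a, b)] = weights.get((a, b), 0.0) + rate
    return weights

def spread(weights, source, activation=1.0):
    """Activate `source` and pass activation to linked units, scaled by link strength."""
    result = {}
    for (a, b), w in weights.items():
        if a == source:
            result[b] = activation * w
        elif b == source:
            result[a] = activation * w
    return result

# Invented "experiences": each list is a set of events that occurred together.
episodes = [
    ["snow", "cold"],
    ["snow", "cold", "silence"],
    ["snow", "cold"],
    ["piano", "silence"],
]
weights = hebbian_train(episodes)
activated = spread(weights, "snow")
# "cold" co-occurred with "snow" more often than "silence" did,
# so it receives more activation; "piano" was never linked to "snow".
```

Knowledge here is stored nowhere in particular: it exists only as the pattern of link strengths, which is what is meant by a distributed representation.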

Artificial Neural Networks

Artificial Neural Networks (ANNs) are composed of numerous simple and highly interconnected units. While ANNs are inspired by the brain’s neural architecture, it is important to acknowledge that they are not directly comparable. Significant differences exist between biological neurons and their artificial counterparts, and drawing a one-to-one parallel between human neural networks and ANNs is not correct. * See for example: Thomas Wood, "How similar are Neural Networks to our brains?", Fast Data Science (2022). https://fastdatascience.com/ai-in-research/how-similar-are-neural-networks-to-our-brains/.

These single units are traditionally named neurons, and they process information in parallel, in contrast to most symbolic models where the processing is serial. * David E. Rumelhart, James L. McClelland, and PDP Research Group, Parallel Distributed Processing, Volume 1: Explorations in the Microstructure of Cognition: Foundations (The MIT Press, 1986). https://doi.org/10.7551/mitpress/5236.001.0001. Each unit receives some information as input and then transmits it to other units according to some mathematical function –usually nonlinear. There is no central control unit for the network; rather, all neurons function as individual processors. The units have no memory in themselves, but earlier inputs are represented indirectly via the changes in weights * Weights in neural networks are numerical values that represent the strength and influence of connections between neurons. they have caused. As the changes in weights are slower than the changes in the inputs, neural networks are less sensitive to noise. * Noise is any unwanted random disturbance or interference that distorts or disrupts the transmission and processing of a signal, affecting the clarity and accuracy of the information being communicated. Weights in a neural network determine the strength and influence of each input on the output, with higher weights amplifying the input’s impact and lower weights reducing it. The inputs to the network also gradually change the strength –the weights– of the connections between units according to some learning rule.
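As a minimal sketch of the description above, a single unit can be written as a weighted sum passed through a nonlinear function, with a learning rule that gradually adjusts the weights. The choice of a sigmoid function, the delta rule, the learning rate, and the two toy input patterns are assumptions for illustration; they are not the only options found in the literature.

```python
import math

def sigmoid(x):
    """A common nonlinear function that squashes any value into the range 0..1."""
    return 1.0 / (1.0 + math.exp(-x))

def unit_output(weights, bias, inputs):
    """Weighted sum of the inputs, passed through the nonlinear function."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(total)

def delta_update(weights, bias, inputs, target, rate=0.5):
    """One small step of the delta rule: nudge each weight to reduce the error."""
    out = unit_output(weights, bias, inputs)
    grad = (target - out) * out * (1.0 - out)
    new_weights = [w + rate * grad * x for w, x in zip(weights, inputs)]
    return new_weights, bias + rate * grad

weights, bias = [0.0, 0.0], 0.0
before = unit_output(weights, bias, [1.0, 0.0])  # untrained unit: undecided

# The unit has no memory of its own; repeated exposure leaves its trace in the weights.
for _ in range(200):
    weights, bias = delta_update(weights, bias, [1.0, 0.0], target=1.0)
    weights, bias = delta_update(weights, bias, [0.0, 1.0], target=0.0)

after_on = unit_output(weights, bias, [1.0, 0.0])   # now close to 1
after_off = unit_output(weights, bias, [0.0, 1.0])  # now close to 0
```

Each update changes the weights only slightly, which is why a single unusual input has little effect on what the unit has learned.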

ANNs have been developed for diverse tasks, for example, for prediction or classification purposes * In Data Science, prediction and classification are two fundamental concepts used for analyzing data and making informed decisions. Prediction involves estimating a continuous outcome based on input data. For example, predicting the future stock price, temperature, or sales revenue based on historical data and other influencing factors. The goal is to forecast a numeric value. Classification, on the other hand, involves assigning categorical labels to input data. It’s about sorting data into predefined classes or groups. For example, classifying emails as spam or not spam, determining if a tumor is benign or malignant, or categorizing customer feedback as positive, neutral, or negative. in fields such as computer vision, image classification, and language processing, among others. However, nowadays, one of the most relevant topics in contemporary societies –that of generative AI– is based mainly on ANNs. Recent advancements in this field, especially the systems known as Large Language Models (LLMs), such as those behind ChatGPT, rely on complex, multi-layered networks to achieve the increasingly impressive results that shock us every day. * GPT stands for “Generative Pre-trained Transformer,” and it refers to a type of language model that has generative capabilities, is pre-trained on vast amounts of text data, and uses a neural architecture known as the transformer to understand and generate human-like text. For a comprehensive survey of ChatGPT-related research and its applications across various domains see Yiheng Liu et al., "Summary of chatgpt-related research and perspective towards the future of large language models," Meta-Radiology 1 (2023), 2, https://doi.org/10.1016/j.metrad.2023.100017.

Some of the drawbacks of using ANNs of the type described here for representing information are that ANNs learn slowly, and they need huge amounts of data for effective learning. Usually, these models require large-scale computational infrastructure, which leaves a significant carbon footprint. * Ahmad Faiz et al., "LLMcarbon: Modeling the end-to-end carbon footprint of large language models," arXiv preprint arXiv:2309.14393 (2023). Another problem is that ANNs are essentially suited to a single domain, for example, visual or speech recognition, mathematical prediction, input classification, etc. An assumption that is implicitly made when constructing an ANN is that there is a given domain for the receptors of the network. The restriction to a particular domain turns out to confine the representational capacities of ANNs. In general, a network cannot generalize what it has learned from one domain to another. * However, in recent times, this is changing at a fast pace. For example, recent research in adversarial neural networks, transformers, and transfer learning techniques enhances ANNs’ ability to generalize across domains, allowing them to apply learned knowledge from one area to another more effectively. See for example Jindong Gu et al., "A survey on transferability of adversarial examples across deep neural networks," arXiv preprint arXiv:2310.17626 (2023).

There are two types of learning process for an ANN: supervised and unsupervised. In the supervised mode, each input is paired with a target, which the network learns to produce as the expected output for similar inputs. An unsupervised learning process means that the inputs are not matched with an expected output. Rather, the network should find some similarities and recurrent patterns in the data that should provide it with enough knowledge, for example, to classify the inputs into a certain category.
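The contrast between the two modes can be sketched on the same toy data. In this hedged example, the supervised learner is a perceptron that receives a label with every input, while the unsupervised learner is a simple competitive scheme that receives no labels and only moves its nearest "centre" toward each input. The note durations, labels, starting centres, and function names are invented for illustration.

```python
def train_supervised(samples, labels, epochs=20, rate=0.1):
    """Perceptron rule: every input comes with a target label (0 or 1)."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for x, target in zip(samples, labels):
            predicted = 1 if w * x + b > 0 else 0
            w += rate * (target - predicted) * x
            b += rate * (target - predicted)
    return w, b

def train_unsupervised(samples, centres, epochs=20, rate=0.2):
    """Competitive learning: no targets; the closest centre moves toward each input."""
    centres = list(centres)
    for _ in range(epochs):
        for x in samples:
            winner = min(range(len(centres)), key=lambda i: abs(centres[i] - x))
            centres[winner] += rate * (x - centres[winner])
    return centres

# Hypothetical data: note durations in seconds.
durations = [0.1, 0.2, 0.25, 1.0, 1.2, 1.5]
labels = [0, 0, 0, 1, 1, 1]  # 0 = "short", 1 = "long" (supervised mode only)

w, b = train_supervised(durations, labels)

def classify(duration):
    """Apply the learned boundary to a new, unseen duration."""
    return 1 if w * duration + b > 0 else 0

# Without labels, the two centres settle on the two groups present in the data.
centres = train_unsupervised(durations, centres=[0.0, 2.0])
```

In the supervised case, the categories are given from outside; in the unsupervised case, the two groups emerge from regularities in the data alone.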

When discussing the concepts of latent space and embedded space that I mentioned in the ‘Multidisciplinary Insights’ chapter, we are essentially referring to how ANNs represent information: as high-dimensional spaces defined by the activity of neurons and the connections between them. Unlike low-dimensional state spaces used in symbolic systems, such as constraint algorithms, the dimensions of an ANN are not easily interpretable by a human observer.

Neural Networks in Music

In the field of music, the use of ANNs can be generally divided into two main trends: on one hand, music information retrieval, which aims to design models capable of recognizing temporal structure and semantics present in music inputs; * Keunwoo Choi et al., "Convolutional recurrent neural networks for music classification," Proceedings of the IEEE International conference on acoustics, speech and signal processing (ICASSP), (2017). on the other hand, generative models aimed at producing new music, for example, the systems AIVA, * https://www.aiva.ai/ Magenta, * https://magenta.tensorflow.org/music-transformer or MuseNet. * https://openai.com/index/musenet/. In both cases, these systems learn from a dataset * A dataset is a structured collection of data, often organized in tables, arrays, or files, that is used for training neural networks or other machine learning algorithms. of preexisting musical data. These datasets can consist of symbolic musical representations, such as the information encoded in a musical score, or of audio samples.

In generative music systems, there are differences in complexity and capabilities that affect their outcomes. Simple feedforward networks * In feedforward neural networks, information flows in one direction, from input to output, through one or more layers of neurons, without recurrences or feedback loops. generally focus on basic pattern recognition, whereas deep learning * Deep learning refers to the use of neural networks with many (often dozens or more) hidden layers of neurons. models use a layered architecture of neurons to capture more complex patterns and longer temporal dependencies in data. Additionally, deep learning models generally require substantial data and computational power for the training phase, which is usually done using Graphics Processing Units (GPUs) or Tensor Processing Units (TPUs).
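A minimal sketch of the one-directional flow in a feedforward network follows. The weights are set by hand rather than learned, which is an assumption made to keep the example short; the pattern it computes (output 1 when exactly one of two inputs is active) is a classic case that a single unit cannot represent but one hidden layer can.

```python
def relu(x):
    """Nonlinear activation: negative sums are cut off at zero."""
    return max(0.0, x)

def identity(x):
    return x

def layer(inputs, weights, biases, activation):
    """One layer: every unit takes a weighted sum of all inputs, then applies the activation."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def feedforward(inputs, layers):
    """Pass the signal through each layer in order; nothing flows backwards."""
    signal = inputs
    for weights, biases, activation in layers:
        signal = layer(signal, weights, biases, activation)
    return signal

# Hand-set, hypothetical weights: (weights per unit, biases, activation) for each layer.
network = [
    ([[1.0, 1.0], [1.0, 1.0]], [0.0, -1.0], relu),  # hidden layer, 2 units
    ([[1.0, -2.0]], [0.0], identity),               # output layer, 1 unit
]

outputs = {pair: feedforward(list(pair), network)[0]
           for pair in [(0, 0), (0, 1), (1, 0), (1, 1)]}
```

Deep learning models stack many such layers and learn their weights from data instead of having them set by hand.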

Normally, generative systems based on ANNs primarily serve roles outside of compositional artistic purposes. They largely generate music for functional purposes. This is evident in some of the systems mentioned above, which are geared toward producing adaptable music in different styles. The artistic depth of such output is less compelling and appears more “instrumental” than expressive. However, some music has been composed using ANNs for artistic and investigative purposes.

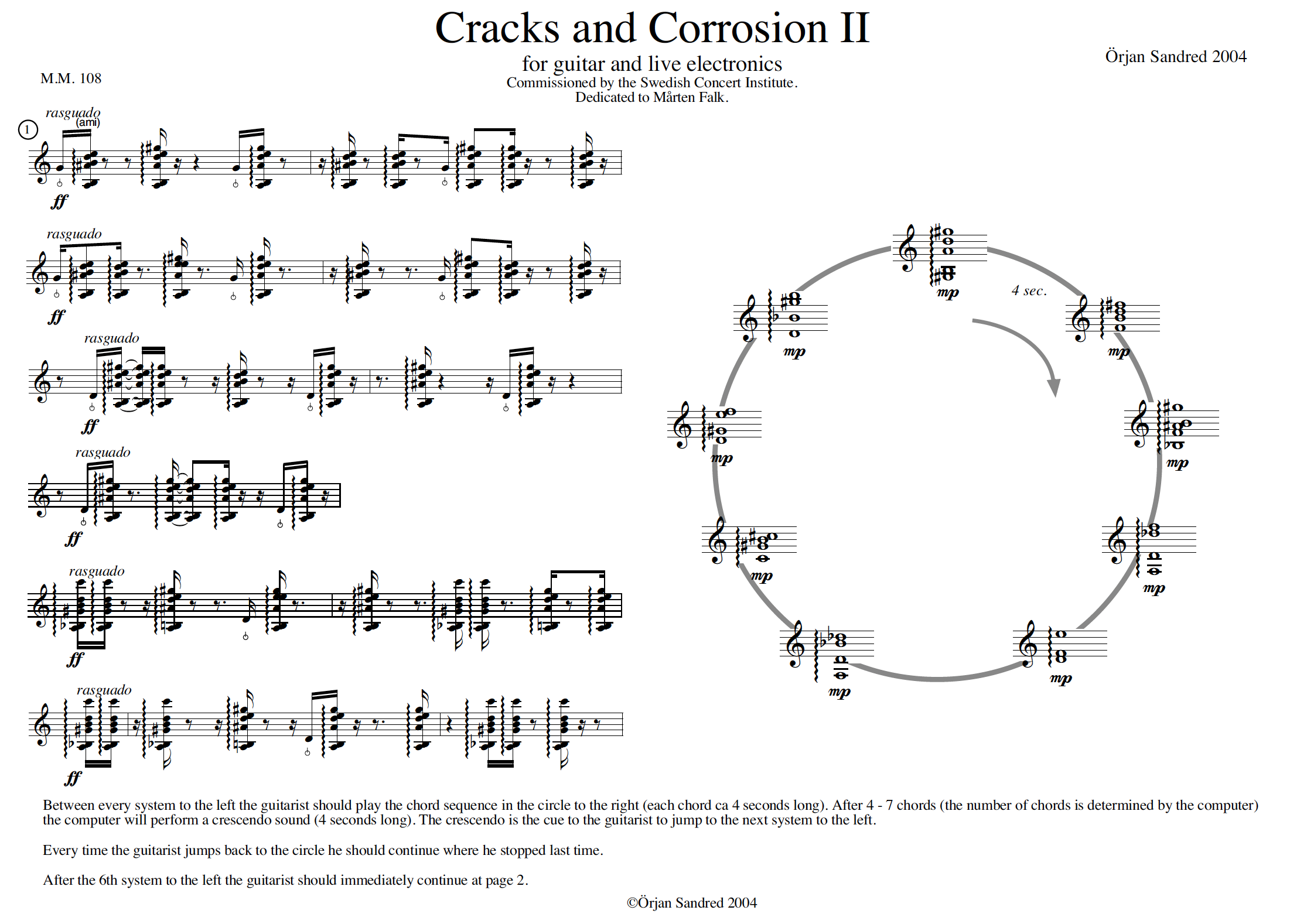

An interesting example is Örjan Sandred’s Cracks and Corrosion II for guitar and electronics (2004). In this piece, an ANN is employed for timbre detection: the system analyzes the guitar’s spectrum and sends the data to the ANN that classifies the sound based on its timbral qualities –for example, more or less noise. * Previous research on this issue can be found for example in Tae Hong Park, "Towards automatic musical instrument timbre recognition" (Princeton University, 2004). The outcome of this analysis triggers different electronic samples depending on the timbre detected. For instance, the playing technique of rasgueado on the guitar will prompt different sounds in the electronics compared to normal playing.
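The general shape of such a pipeline (spectral analysis, classification, triggering) can be sketched as follows. This is not Sandred’s implementation: a fixed threshold on a single feature, spectral flatness, stands in for the trained network, and the threshold value, frame data, and file names are invented for illustration.

```python
import math

def spectral_flatness(magnitudes):
    """Geometric mean over arithmetic mean: near 1 for noise-like spectra, near 0 for tonal ones."""
    eps = 1e-12  # avoids log(0) and division by zero
    log_mean = sum(math.log(m + eps) for m in magnitudes) / len(magnitudes)
    return math.exp(log_mean) / (sum(magnitudes) / len(magnitudes) + eps)

def classify_timbre(magnitudes, threshold=0.5):
    """Stand-in for a trained network: a fixed threshold on one feature."""
    return "noisy" if spectral_flatness(magnitudes) > threshold else "pitched"

# Invented file names: which electronic sample each timbre class triggers.
SAMPLES = {"noisy": "sample_a.wav", "pitched": "sample_b.wav"}

def trigger(magnitudes):
    """Map one analysis frame to the sample that should be played."""
    return SAMPLES[classify_timbre(magnitudes)]

# Two hypothetical magnitude spectra: a few strong partials vs. evenly spread energy.
tonal_frame = [0.01, 0.01, 1.0, 0.01, 0.01, 0.5, 0.01, 0.01]
noisy_frame = [0.4, 0.5, 0.45, 0.55, 0.5, 0.4, 0.6, 0.5]

tonal_choice = trigger(tonal_frame)
noisy_choice = trigger(noisy_frame)
```

A trained network would replace the threshold with a learned mapping from many spectral features to timbre classes, but the flow from analysis to triggered sound would keep this shape.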

Neural Networks and Intuition

Both types of ANNs –supervised and unsupervised– share certain commonalities. For instance, the model isn’t explicitly taught rules about the nature of its input. Instead, the ANN examines data, identifying repetitions and making generalizations: it detects recurring patterns within the observed data. Rather than being given rules, the model infers them from experience. It generalizes from its training, discerns patterns, and makes predictions, or classifies.

Wait. That sounds familiar. I wrote almost the same words when I discussed intuition in the chapter ‘Multidisciplinary Insights’:

“Intuition, on the other hand, involves arriving at conclusions or insights without the conscious use of formal rules or explicit reasoning. It often feels like an immediate awareness of a solution, idea, or decision. (…) Intuition is mainly based on tacit knowledge, pattern recognition, generalization, and subconscious processing of information.”

Is AI intuitive, then?

Herbert Simon, * Herbert A. Simon was an influential American economist, cognitive psychologist, and computer scientist who received the Nobel Prize in Economic Sciences in 1978 for his pioneering research on decision-making processes within economic organizations. in the mid-1990s, proposed that AI had reached a point where it could replicate the mechanisms of human intuition. * Herbert A. Simon, "Explaining the ineffable: AI on the topics of intuition, insight and inspiration," IJCAI (1) (1995); Roger Frantz, "Herbert Simon. Artificial intelligence as a framework for understanding intuition," Journal of Economic Psychology 24, no. 2 (2003), https://doi.org/10.1016/S0167-4870(02)00207-6. He argued that the processes underlying human decision-making and problem-solving, often attributed to intuition, could be modeled and simulated by AI systems. Simon made this claim a long time ago. Visions around AI have changed, in particular after the emergence of LLMs * LLM stands for large language model. LLMs are a type of artificial intelligence model designed to understand and generate human language. They are trained on vast amounts of text data to learn patterns, grammar, context, and even some level of reasoning. LLMs can perform a wide range of language-related tasks, such as text generation, translation, summarization, and question-answering. and subsidiary gen-AI systems. Currently, the discussion about intuition in AI is focused, in particular, on the commonalities between the learning process of LLMs and humans.

K. Hayles, for example, has proposed that the replication of cognitive mechanisms in LLMs is an uncanny reproduction of our associative and reward-based learning process, * K. Hayles’ presentation “Do LLMs Understand Humans and Their Questions, or Are They ‘Stochastic Parrots’?” (at the symposium “The Only Lasting Truth is Change,” organized by Bergen Senter for Elektronisk Kunst (BEK), November 18, 2023), revolved mainly around this issue. which is largely reliant on semantic indexing. * Semantic indexing is a technique in data science focused on identifying patterns in the relationships between terms and concepts within a text. It operates on the principle that words appearing in similar contexts tend to have similar meanings. A key feature of Latent Semantic Indexing (LSI) is its ability to extract the conceptual content of a text by establishing associations between terms that occur in similar contexts. For an accessible discussion on issues around latent semantic analysis and semantic indexing, visit the Wikipedia article “Latent Semantic Analysis” here https://en.wikipedia.org/wiki/Latent_semantic_analysis#Latent_semantic_indexing. In order to understand better the role of semantic indexing in large generative models, see for example https://www.sikich.com/insight/semantic-indexes-the-secret-sauce-for-supercharging-generative-ai-performance/. The only difference, she says, is embodiment: the fact that our body mediates between our mind and the world. This is not a minor thing, of course. LLMs don’t have that, and unless they acquire it, they will probably never reach the human-level capacity associated with Artificial General Intelligence (AGI). * Artificial General Intelligence (AGI) is a type of AI that can understand, learn, and apply knowledge across a wide range of tasks at a level comparable to human intelligence. This pursuit is at the core of the research efforts of big AI companies like OpenAI and Microsoft. 
In this regard, a seminal research report was published in March 2023, based on experiments with the model GPT-4. See Bubeck et al., "Sparks of Artificial General Intelligence: Early experiments with GPT-4." arXiv preprint arXiv:2303.12712. However, for K. Hayles, it seems that the rest of the process is shared between humans and AI models; therefore, intuition could be seen as an emergent property of AI models. Of course, the fact that we might share some cognitive mechanisms with AI doesn’t make it literally intuitive, sentient, or alive in a human form. * The problem, as Margaret Boden says, comes with the use of the verb is (from the verb to be in English), as it would equate these systems in some way with humans, opening the door to deeper ethical issues. If AI models are, then they are subjects of rights, ownership, etc. See chapter 11 in Boden, The creative mind: myths and mechanisms. As of now, there is no evidence of sentience or self-awareness in them, despite some recent claims. * “Blake Lemoine on Whether Google’s AI Has Come to Life,” ‘Futurism’, accessed Oct. 30, 2024. Boden proposes that AI is seemingly creative or seemingly intuitive. It appears to be so. And appears to do it very well.

Ultimately, stating that AI is intuitive is a bold claim, and it remains an unclear and contentious area to explore; however, as the discussion above suggests, intuition and AI may be more closely related than one might initially think. For now, I prefer to leave it as an open question; I will delve deeper into these and other related issues surrounding AI in the section ‘Composition and gen-AI.’

Intuitive Computers / Rational Composers

The subtitle of the project, Intuitive Computers / Rational Composers, is intentionally provocative, as it proposes a subverted perception of the typical creative process involving computers: normally, the computer is the agent that applies the rules and does all the calculations, while the composer brings their own intuition and non-linear thought to infuse these algorithmic and hyper-formalized outcomes with a spark of life or added interest –a notion I discussed extensively in the piece Versificator – Render 3. However, here the equation is reversed: the computer takes on the role of an intuitive agent, while the composer uses logical rules to shape and refine the computer’s intuitive outputs. What does this mean?

In the next piece –or more precisely, the cycle of pieces– a significant part of my creative investigation revolved around a suite of CAC tools that integrate a feedforward neural network with a constraint-based compositional framework. The system combines the predictive capabilities of neural networks trained on symbolic musical data with a backtracking constraint solver, allowing the integration of inferential –intuitive?– and rule-based –rational?– approaches in a compositional workflow. I named this suite of tools NeuralConstraints. The development of this library became a central focus of my research in the later stages, requiring substantial effort on its technical implementation, hence the relevance of the subtitle.
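The general idea of pairing a predictive model with a backtracking constraint solver can be sketched in a few lines. This is not the NeuralConstraints code or its interface: `predict_next` is a stand-in heuristic in place of a trained network, and the rules, pitch range, and function names are invented for illustration.

```python
def predict_next(sequence):
    """Stand-in for a trained network: returns candidate pitches, most likely first.
    Here it simply prefers pitches close to the last one (an invented heuristic)."""
    last = sequence[-1]
    candidates = range(60, 73)  # MIDI pitches C4 to C5
    return sorted(candidates, key=lambda p: (abs(p - last), p))

def satisfies_rules(sequence, length):
    """Rule-based side: no repeated pitch, no leap above a fifth, final pitch must be G4 (67)."""
    if len(set(sequence)) != len(sequence):
        return False
    if any(abs(a - b) > 7 for a, b in zip(sequence, sequence[1:])):
        return False
    if len(sequence) == length and sequence[-1] != 67:
        return False
    return True

def solve(sequence, length):
    """Backtracking search that tries the model's suggestions in ranked order."""
    if len(sequence) == length:
        return sequence
    for pitch in predict_next(sequence):
        candidate = sequence + [pitch]
        if satisfies_rules(candidate, length):
            result = solve(candidate, length)
            if result is not None:
                return result
    return None  # dead end: the caller moves on to its next suggestion

melody = solve([60], length=6)
```

The model proposes and the rules dispose: the solver always takes the most probable suggestion that the constraints allow, and steps back to an earlier choice only when every suggestion at some point has been rejected.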

In what comes next, however, I will briefly outline its role in my creative process and focus on its creative use in the pieces. A longer description of NeuralConstraints can be found in the chapter ‘Contributions/Conclusions?.’ Additionally, the technical explanations and tests included to further demonstrate the library’s capabilities have been published elsewhere as a scientific journal article. * Vassallo, Juan S. et al. "Neuralconstraints: Integrating a Neural Generative Model with Constraint-Based Composition." Frontiers in Computer Science, vol. 7, 2025, https://doi.org/10.3389/fcomp.2025.1543074. But before that, I will discuss the motivation and conceptual backdrop behind the piece Oscillations.